Configure CDP target for Hive metadata migrations

Hivemigrator can migrate metadata to Hive or another metastore service that runs as part of an Apache Hadoop deployment. Metadata migrations make the metadata from a source environment available in a target environment, where tools like Apache Hive can query the data.

The following examples show how to add Metadata Agents that use Override JDBC Connection Properties, which are required for migrating transactional, managed tables on Hive 3+ on CDP Hadoop clusters.

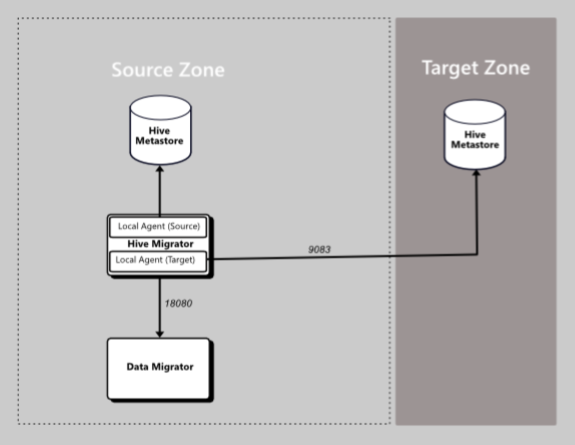

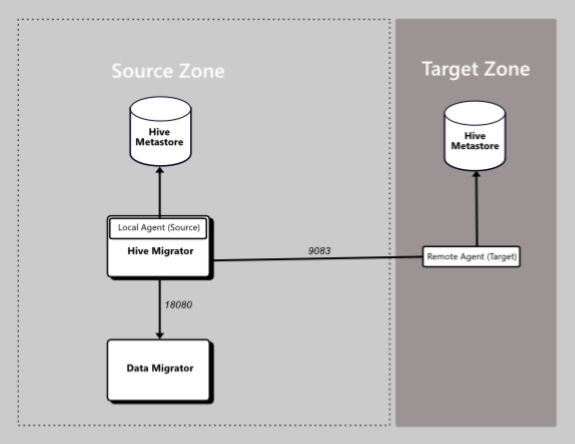

Remote or local agent

Data Migrator interacts with a metastore using a "Metadata Agent". Agents hold the information needed to communicate with metastores and allow metadata migrations to be defined in Data Migrator. You can deploy each agent locally or remotely. A local agent runs on the Data Migrator host. A remote agent runs as a separate service and can be deployed on a separate host that isn't running Data Migrator.

Deployment with local agents

Deployment with remote agents

Remote agents let you migrate metadata between different versions of Apache Hive. They also give you complete control over the network communication between source and target environments instead of relying on the network interfaces directly exposed by your metadata target.

For a list of agent types available for each supported platform, see Supported metadata agents.

Configure Hive agents with Override JDBC Connection Properties

Configure agents through your preferred interface: UI, Data Migrator CLI, or the REST API.

To add a Metadata Agent with a specific JDBC override, you need:

- The correct JDBC connector for your Hive metastore's underlying database. This will be either MySQL or Postgres.

- The JDBC connection properties and credentials.

JDBC connectors

Hive agents need access to the JDBC driver/connector used to communicate with the Hive metastore's underlying database. For example, a Cloudera Data Platform environment typically uses MySQL or Postgres databases.

Download the appropriate JDBC driver/connector JAR file for MySQL or Postgres.

Note: You only need the JAR file. If you're asked to specify an OS, choose platform independent. If the download is provided as an archive, extract the JAR file from the archive.
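When the driver arrives as an archive, Python's standard library can pull out the JAR without platform-specific tools. The following is a minimal sketch. `extract_driver_jars` is a hypothetical helper, not part of Data Migrator. It returns every JAR it finds because an archive may contain more than one, and it writes only each JAR's base name so that paths inside the archive can't place files outside the output directory.

```python
import tarfile
import zipfile
from pathlib import Path
from typing import List


def extract_driver_jars(archive, out_dir) -> List[Path]:
    """Extract every .jar file from a .zip or .tar.gz driver download."""
    archive, out_dir = Path(archive), Path(out_dir)
    if archive.suffix == ".jar":
        return [archive]  # Already a JAR; nothing to extract.
    found = []  # (member name, file bytes)
    if zipfile.is_zipfile(archive):
        with zipfile.ZipFile(archive) as zf:
            for name in zf.namelist():
                if name.endswith(".jar"):
                    found.append((name, zf.read(name)))
    elif tarfile.is_tarfile(str(archive)):
        # Mode "r" detects gzip/bzip2/xz compression automatically.
        with tarfile.open(str(archive)) as tf:
            for member in tf.getmembers():
                if member.isfile() and member.name.endswith(".jar"):
                    found.append((member.name, tf.extractfile(member).read()))
    else:
        raise ValueError(f"{archive} is not a JAR, ZIP, or tar archive")
    if not found:
        raise ValueError(f"no .jar file found in {archive}")
    written = []
    for name, data in found:
        # Keep only the base name so archive paths cannot escape out_dir.
        target = out_dir / Path(name).name
        target.write_bytes(data)
        written.append(target)
    return written
```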

Copy the JDBC driver JAR to /opt/wandisco/hivemigrator/agent/hive on your metadata agent host machine.

Set the ownership of the file to the Hive Migrator system user and group for your Hivemigrator instance.

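Example (replace hive:hadoop with your Hive Migrator user and group if they differ):

chown hive:hadoop /opt/wandisco/hivemigrator/agent/hive/mysql*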
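If you script the driver installation, you can express the copy-and-chown steps in Python. The following is a minimal sketch for Unix hosts. It assumes the script runs with enough privileges to change file ownership (typically root) and that hive:hadoop is the Hive Migrator user and group. `install_jdbc_jar` is a hypothetical helper, not part of Data Migrator.

```python
import shutil
import zipfile
from pathlib import Path
from typing import Union

# Directory named in this guide for the Hive agent's JDBC driver.
AGENT_LIB_DIR = Path("/opt/wandisco/hivemigrator/agent/hive")


def install_jdbc_jar(jar: Union[str, Path],
                     dest_dir: Union[str, Path] = AGENT_LIB_DIR,
                     user: Union[str, int] = "hive",
                     group: Union[str, int] = "hadoop") -> Path:
    """Copy a JDBC driver JAR into dest_dir, then chown it to user:group."""
    jar, dest_dir = Path(jar), Path(dest_dir)
    # A JAR is a ZIP file; reject archives that still need extracting.
    if jar.suffix != ".jar" or not zipfile.is_zipfile(jar):
        raise ValueError(f"{jar} is not a JAR file")
    if not dest_dir.is_dir():
        raise FileNotFoundError(f"{dest_dir} does not exist on this host")
    target = dest_dir / jar.name
    shutil.copy2(jar, target)
    # Same effect as: chown user:group <target> (names or numeric IDs).
    shutil.chown(target, user=user, group=group)
    return target
```

shutil.chown accepts either names or numeric IDs, so the helper also works when the user and group are given as a UID and GID.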

Configure the CDP target with the UI

To configure a CDP target, complete the Override JDBC Connection Properties step when you add the Metastore Agent:

Select Override JDBC Connection Properties to override the JDBC properties used to connect to the Hive metastore database.

Enter the Connection URL - JDBC URL for the database.

- Example: jdbc:postgresql://test.bdauto.cirata.com:7432/hive

- Example: jdbc:mysql://server1.cluster1.cirata.com:3306/hive

Enter the Connection Driver Name - The full class name of the JDBC driver. Use either:

- com.mysql.jdbc.Driver

- org.postgresql.Driver

Enter the Connection Username - The username for your metastore database.

Enter the Connection Password - The password for your metastore database.

(Optional) Enter a Default Filesystem Override to override the default filesystem URI.

Select Save.
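Before you select Save, a quick consistency check can catch a Connection URL and Connection Driver Name that don't match. The following Python sketch is illustrative only. `check_jdbc_properties` is a hypothetical helper, not part of Data Migrator, and it only recognizes the MySQL and Postgres driver classes listed above.

```python
from urllib.parse import urlsplit

# Driver class expected for each JDBC URL scheme (from the steps above).
DRIVERS = {
    "mysql": "com.mysql.jdbc.Driver",
    "postgresql": "org.postgresql.Driver",
}


def check_jdbc_properties(url: str, driver: str) -> dict:
    """Split a Hive metastore JDBC URL and confirm the driver matches it."""
    if not url.startswith("jdbc:"):
        raise ValueError("Connection URL must start with 'jdbc:'")
    # Drop the 'jdbc:' prefix so urlsplit sees e.g. 'mysql://host:3306/hive'.
    parts = urlsplit(url[len("jdbc:"):])
    if parts.scheme not in DRIVERS:
        raise ValueError(f"unsupported database type: {parts.scheme!r}")
    expected = DRIVERS[parts.scheme]
    if driver != expected:
        raise ValueError(f"{parts.scheme} URLs need driver {expected}")
    return {
        "type": parts.scheme,
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/"),
    }
```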

With redaction disabled (see Optional: temporarily turn off redaction), you can get the required JDBC configuration, including the metastore database password, using the following API call:

{clusterHost}:{clusterPort}/api/v19/clusters/{clusterName}/services/{serviceName}/config

For example:

abcd01-vm0.domain.name.com:7180/api/v19/clusters/ABCD-01/services/hive1/config
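Instead of copying values by hand, you could fetch the configuration and map it onto the Override JDBC Connection Properties fields. The following is a minimal Python sketch that relies on several assumptions:

- The Cloudera Manager API is reachable over HTTP with basic authentication.
- The Hive metastore database settings are named hive_metastore_database_type, _host, _port, _name, _user, and _password. Only _password appears in this guide, so check the other names against your own response.
- A redacted password is returned as the literal string REDACTED.

Settings left at their defaults may be missing from the response. In that case, adding ?view=full to the URL should include them with a default key, which the sketch falls back to. Both function names are hypothetical.

```python
import base64
import json
import urllib.request

# Assumed Cloudera Manager item names: hive_metastore_database_<key>.
KEYS = ("type", "host", "port", "name", "user", "password")
DRIVERS = {"mysql": "com.mysql.jdbc.Driver", "postgresql": "org.postgresql.Driver"}


def fetch_hive_config(base_url: str, cluster: str, service: str,
                      user: str, password: str) -> dict:
    """GET {base_url}/api/v19/clusters/{cluster}/services/{service}/config."""
    url = f"{base_url}/api/v19/clusters/{cluster}/services/{service}/config"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def override_jdbc_properties(config: dict) -> dict:
    """Map the hive_metastore_database_* items to the Override JDBC fields."""
    # Use the explicit value if set, else the default (present with ?view=full).
    items = {i["name"]: i.get("value", i.get("default"))
             for i in config.get("items", [])}
    db = {k: items.get(f"hive_metastore_database_{k}") for k in KEYS}
    missing = [k for k, v in db.items() if v is None]
    if missing:
        raise KeyError(f"settings not in response: {missing}")
    if db["password"] == "REDACTED":  # assumed redaction placeholder
        raise ValueError("password is redacted; turn off redaction first")
    return {
        "Connection URL": f"jdbc:{db['type']}://{db['host']}:{db['port']}/{db['name']}",
        "Connection Driver Name": DRIVERS[db["type"]],
        "Connection Username": db["user"],
        "Connection Password": db["password"],
    }
```

For the example host above, a call might look like override_jdbc_properties(fetch_hive_config("http://abcd01-vm0.domain.name.com:7180", "ABCD-01", "hive1", user, password)), where user and password are your Cloudera Manager credentials.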

Configure the CDP target with the CLI

Use the hive agent add hive CLI command with the --jdbc-url, --jdbc-driver-name, --jdbc-username and --jdbc-password parameters to add the Metastore Agent with JDBC credentials.

For example:

hive agent add hive --name targetautoAgent5 --host test.cirata.com --port 5052 --no-ssl --jdbc-url jdbc:postgresql://test.bdauto.cirata.com:7432/hive --jdbc-driver-name org.postgresql.Driver --jdbc-username admin --jdbc-password *** --file-system-id 'testfs'

For more information, see Command reference.
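If you add several agents, you might generate the command rather than type it. The following Python sketch assembles the hive agent add hive command from the --jdbc-url, --jdbc-driver-name, --jdbc-username, and --jdbc-password parameters and the other options in the example. The helper and its argument names are hypothetical. By default it adds --no-ssl, as the example does, and it doesn't cover SSL options. It assumes the Data Migrator CLI accepts POSIX-shell-style quoting for values that contain spaces or special characters.

```python
import shlex


def hive_agent_add_command(name: str, host: str, port: int, jdbc_url: str,
                           driver: str, username: str, password: str,
                           file_system_id: str, no_ssl: bool = True) -> str:
    """Build a 'hive agent add hive' command line (hypothetical helper)."""
    args = ["hive", "agent", "add", "hive",
            "--name", name, "--host", host, "--port", str(port)]
    if no_ssl:
        args.append("--no-ssl")  # SSL options are not covered by this sketch
    args += [
        "--jdbc-url", jdbc_url,
        "--jdbc-driver-name", driver,
        "--jdbc-username", username,
        "--jdbc-password", password,
        "--file-system-id", file_system_id,
    ]
    # Quote each value so spaces or shell metacharacters stay intact.
    return shlex.join(args)
```

A generated command contains the password in plain text, so avoid logging it or leaving it in shell history.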

Guidance for javax.jdo.option.ConnectionDriverName

If you're using a MySQL database:

- --jdbc-driver-name is com.mysql.jdbc.Driver

- Port used is 3306

- Database type is mysql

If you're using a Postgres database:

- --jdbc-driver-name is org.postgresql.Driver

- Port used is 7432

- Database type is postgresql
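The guidance above amounts to a lookup keyed by database type. The following sketch derives the --jdbc-url and --jdbc-driver-name values from that lookup. jdbc_settings and MetastoreDatabase are hypothetical names. The database name defaults to hive, as in this guide's examples. You can override the port if your database listens elsewhere; PostgreSQL's own default port, for example, is 5432.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class MetastoreDatabase:
    driver: str  # value for --jdbc-driver-name
    port: int    # port used, per the guidance above


# The MySQL and Postgres guidance above, keyed by database type.
DATABASES = {
    "mysql": MetastoreDatabase("com.mysql.jdbc.Driver", 3306),
    "postgresql": MetastoreDatabase("org.postgresql.Driver", 7432),
}


def jdbc_settings(db_type: str, host: str, database: str = "hive",
                  port: Optional[int] = None) -> Tuple[str, str]:
    """Return (JDBC URL, driver class) for a Hive metastore database."""
    db = DATABASES[db_type]  # KeyError for unsupported database types
    chosen_port = port if port is not None else db.port
    return f"jdbc:{db_type}://{host}:{chosen_port}/{database}", db.driver
```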

Next steps

If you have already added Metadata Rules, create a Metadata Migration using the Metadata Agent.

Optional: temporarily turn off redaction

If you don't know the password to connect to your Hive metastore, get it from your Hadoop environment. If your Hadoop platform redacts the password, follow the steps below on the node running your cluster manager:

Open /etc/default/cloudera-scm-server in a text editor.

Edit the following line, changing "true" to "false", so that it reads:

export CMF_JAVA_OPTS="$CMF_JAVA_OPTS -Dcom.cloudera.api.redaction=false"

Save the change.

Restart the manager using the command:

$ sudo service cloudera-scm-server restart

This may take a few minutes.

Get the required JDBC configuration using the following API call:

{clusterHost}:{clusterPort}/api/v19/clusters/{clusterName}/services/{serviceName}/config

For example:

abcd01-vm0.domain.name.com:7180/api/v19/clusters/ABCD-01/services/hive1/config

The hive_metastore_database_password is no longer redacted, and its value appears beside the value key. For example:

{
  "name" : "hive_metastore_database_password",
  "value" : "The-true-password-value",
  "sensitive" : true
}

Info: Enable redaction after you confirm your system's credentials.
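Because you must turn redaction back on afterward, it can help to flip the flag the same way in both directions. The following is a minimal sketch. It assumes the flag appears exactly as -Dcom.cloudera.api.redaction=true or -Dcom.cloudera.api.redaction=false in /etc/default/cloudera-scm-server. set_redaction is a hypothetical helper that only rewrites text; it doesn't save the file or restart the service.

```python
import re

# Matches the redaction system property inside CMF_JAVA_OPTS.
REDACTION_FLAG = re.compile(r"-Dcom\.cloudera\.api\.redaction=(?:true|false)")


def set_redaction(text: str, enabled: bool) -> str:
    """Return text with com.cloudera.api.redaction set to true or false."""
    value = "true" if enabled else "false"
    new_text, count = REDACTION_FLAG.subn(
        f"-Dcom.cloudera.api.redaction={value}", text)
    if count == 0:
        raise ValueError("redaction flag not found; edit the file by hand")
    return new_text
```

For example, running as root on the cluster manager node, read the file with pathlib, write back set_redaction(text, enabled=True), and then restart cloudera-scm-server as in the steps above.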